“The scientist is not a person who gives the right answers, he’s one who asks the right questions.” ~ Claude Lévi-Strauss

If you don’t know the answer to the question in the title, you’re far from alone. Please don’t google it just yet!

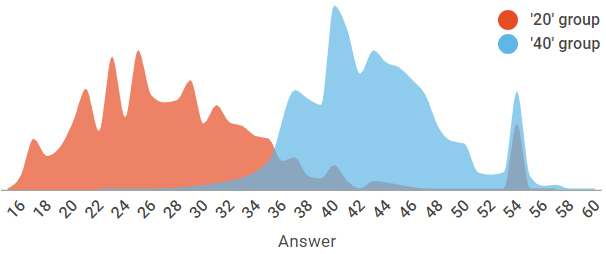

Imagine you’re observing a study. Two groups are separately given the same task: to decide collectively whether the number of countries in Africa is more or less than a provided value. One group is asked “Are there more or less than 20 countries?”; for the other group, the provided number is 40. Let’s look at how this is done and how the groups differed.

The process was the same for both groups: each person wrote down how many countries they thought were in Africa without discussing it first, “to prevent your answer being influenced”. The answers were then averaged, and the group checked whether this average was above or below the provided figure.
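For readers who like to see the mechanics, the aggregation step can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the function name `group_verdict` and the list of guesses are hypothetical, invented purely for illustration, and are not data from the study.

```python
from statistics import mean


def group_verdict(guesses: list[int], provided_value: int) -> tuple[float, str]:
    """Average independent guesses and compare the average with the provided value."""
    average = mean(guesses)
    if average > provided_value:
        verdict = "more"
    elif average < provided_value:
        verdict = "less"
    else:
        verdict = "equal"
    return average, verdict


# Hypothetical guesses for illustration only (NOT data from the study)
guesses = [30, 45, 25, 54, 38]
average, verdict = group_verdict(guesses, provided_value=40)
print(f"Average guess: {average:.1f} -> {verdict} than 40")
```

Note that the sketch only covers the arithmetic: averaging independent answers removes the influence of whoever speaks first in the group, but it does nothing about the influence of the number supplied in the question itself.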

As you have hopefully guessed, this test was not designed to gauge people’s knowledge of geography. You may also have spotted that, despite what was said, the answers were very much influenced by the structure of the test. Having people write their answers independently simply prevented those who knew the correct answer from swaying those who were uncertain. The real purpose was to see how much the initially provided value (20 or 40) swayed the group’s results. Unsurprisingly, the group asked whether there were more or less than 20 countries generally produced lower values than the ‘40’ group.

You can see on the chart that there’s a spike around the correct answer, which is significantly higher than either of the ‘supplied’ values.

This is an example of a leading question: a way of introducing bias that influences the outcome. Leading questions range from the innocuous (“You’ll come to dinner tonight, won’t you?”), through a staple of charity collection (“Do you agree that we need to save the whales?”), right up to push polling, where political candidates seed opinion under the guise of surveying the electorate.

As analysts, we need to stay aware of any bias and try to separate harmful biases from useful indicators, such as those we might get from a subject matter expert sanity-checking our results. It is also worth being aware of how bias can be used to drive desired results. For example, this article in the Harvard Business Review shows how customer satisfaction surveys can increase customer engagement.

Identifying bias is a skill worth developing, whether to eliminate it or to use it (though hopefully not to abuse it).